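The core argument of Dr. Roman Yampolskiy in Part 2: if developers truly understood the existential risk, the instinct for self-preservation would kick in, especially among the young, wealthy elites who lead these projects. He suggests we focus on building beneficial narrow AI rather than racing toward superintelligence.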

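He rebuts the common objections one by one:

- On “just pull the plug”: he argues that this is fundamentally different from nuclear weapons. Nuclear weapons are controlled tools, whereas superintelligence is an autonomous agent that cannot simply be “switched off.”
- On the “control problem”: he is deeply skeptical, and considers permanent control over a superintelligence an “impossible problem to solve.”
- On the “risk-taking mindset”: he finds it deeply irrational to keep building when there is even a 1% risk of human extinction, like drinking a cup of poison with a 1% chance of killing you in exchange for a large sum of money.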

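As for solutions, he is pessimistic about both legislation and the idea of a “surveillance planet.” His main hope is to persuade those in power through self-interest: tell them that if things go wrong, they will perish too.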

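His advice for ordinary people:

- In action: support organizations such as “Pause AI” and try to exert public pressure.
- In life: given how uncertain the future is, live each day as if it were your last. Avoid spending long stretches on things you hate, do more things that are fun and meaningful, help others, and be the best version of yourself.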

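Finally, he reiterates his firm belief in the “simulation theory.” In his view, today’s rapid progress in AI and VR is exactly the precondition for someday creating simulated worlds indistinguishable from reality, which in turn raises the probability that we ourselves already live in a simulation.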

Dr. Roman Yampolskiy:So some people think that we can enhance human minds, 所以有些人认为我们可以增强人脑,

——>> either through combination with hardware or something like Neuralink, 要么通过与硬件结合,比如Neuralink,

——>> or through genetically engineering to where we make smarter humans, 要么通过基因工程制造更聪明的人类,

——>> it may give us a little more intelligence. 这可能会给我们多一点智能。

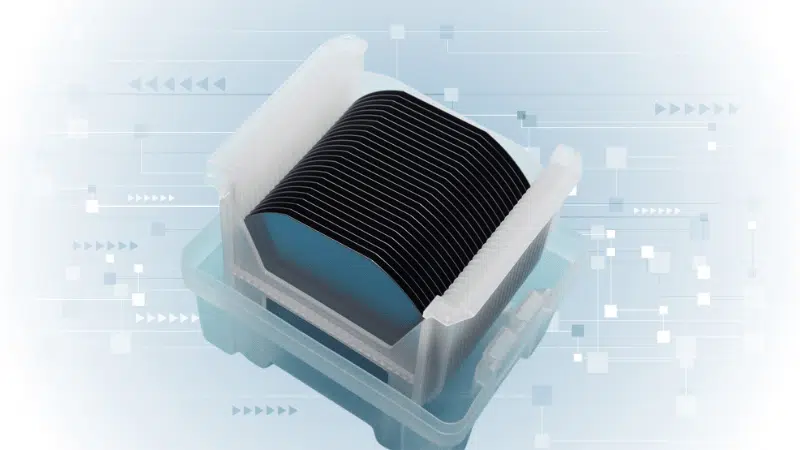

——>> I don’t think we’re still competitive in biological form with silicon form 我认为我们在生物形态上仍然无法与硅基形态竞争

——>> silicon substrate [‘sʌbstreɪt] is much more capable for intelligence, 硅基底对于智能来说能力更强,

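A silicon substrate is a thin wafer of silicon that serves as the base material for building electronic circuits, chips, and semiconductors. It provides the physical platform on which layers of electronic components (such as transistors) are fabricated. By analogy, the silicon substrate is to a chip what a foundation is to a house.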

——>> it’s faster, it’s more resilient, 它更快、更坚韧,

——>> more energy-efficient in many ways. 在许多方面更节能。

Host:Which is what computers are made out of, versus the brain… 硅基正是计算机的制造材料,而大脑…

Dr. Roman Yampolskiy:yeah. 是的。

——>> So I don’t think we can keep up just with improving our biology, 所以我认为仅仅通过改善我们的生物学是无法跟上步伐的,

——>> some people think maybe and this is very speculative, 有些人认为,也许——这是非常推测性的,

——>> we can upload our minds into computers, 我们可以将思维上传到计算机中,

——>> so scan your brain, the connectome of your brain, 扫描你的大脑,也就是你大脑的连接组,

——>> and have a simulation running on a computer 在计算机上运行一个模拟

——>> and you can speed it up give it more capabilities, 你可以加速它,赋予它更多能力,

——>> but to me that feels like you no longer exist, 但对我来说,那感觉像是你不再存在了,

——>> we just created software by different means, 我们只是通过不同的方式创造了软件,

——>> and now you have AI based on biology and AI based on some other forms of training, 现在你有了基于生物学的AI和基于其他训练形式的AI,

——>> you can have evolutionary algorithms [‘ælɡərɪðəm], 你可以使用进化算法,

——>> you can have many paths to reach AGI. 你可以有许多途径来实现AGI。

——>> But at the end, none of them are humans. 但最终,它们都不是人类。

Host:I have another date here, which is 2030, 我这里还有另一个日期,2030年,

——>> what’s your prediction for 2030, 你对2030年的预测是什么?

——>> what will the world look like? 世界会是什么样子?

Dr. Roman Yampolskiy:So, we probably will have humanoid robots with enough flexibility, 所以,我们很可能将拥有具备足够灵活性、

——>> dexterity, to compete with humans in all domains 敏捷性的人形机器人,可以在所有领域与人类竞争,

——>> including plumbers, we can make artificial plumbers. 包括管道工,我们可以制造人造管道工。

Host:Not the plumbers, where that was… that felt 不会吧,连管道工也……那感觉像是

——>> like the last bastion [‘bæstiən] of human employment. 人类就业的最后堡垒。

Dr. Roman Yampolskiy:So 2030, five years from now, 所以到2030年,也就是五年后,

——>> humanoid robots, so many of the companies, the leading companies, 人形机器人……包括特斯拉在内的许多领先公司

——>> including Tesla are developing humanoid robots at light speed 都在以极快的速度开发人形机器人,

——>> and they’re getting increasingly more effective 它们正变得越来越有效

——>> and these humanoid robots will be able to move through physical space 这些人形机器人将能够在物理空间中移动,

——>> you know, make an omelette [‘ɒmlət], do anything humans can do, 你知道,做煎蛋卷,做任何人类能做的事情,

——>> but obviously be connected to AI as well. 但显然也能连接到AI。

Host:so they can think, talk… right. 所以它们能思考、说话……对吧。

Dr. Roman Yampolskiy:They’re controlled by AIs, they’re always connected to the networks, 它们由AI控制,始终连接到网络,

——>> so they’re already dominating in many ways. 所以它们已经在很多方面占据主导地位了。

——>> Our world will look remarkably different 我们的世界将变得截然不同

——>> when humanoid robots are functional and effective, 当人形机器人功能完备且有效时,

——>> because that’s really when, you know, I have something quite… like 因为那才真的是,你知道,我有种相当……就像

——>> the combination of intelligence and physical ability 智能和物理能力结合在一起时,

——>> really, really doesn’t leave much, does it, for us… human beings. 留给我们的……人类的空间真的不多了,是吧?

Host:Not much, so today if you have intelligence through internet, 确实不多了。所以今天,如果你通过互联网拥有智能,

——>> you can hire humans to do your bidding for you, 你可以雇佣人类为你服务,

Do one’s bidding:it means to carry out someone’s orders, wishes, or commands, often implying obedience or service. It can sound formal, sometimes with a hint of irony or criticism, as if the person being asked has little choice but to comply. (听从某人的吩咐、命令或要求去做事,常常带有一种正式或略带讽刺的语气,好像执行者没有太多选择,只能顺从。)

——>> you can pay them in bitcoin, so you can have bodies, just not directly controlling them. 你可以用比特币支付给他们,所以你可以拥有“身体”,只是不直接控制它们。

——>> so it’s not a huge game changer to add direct control of physical bodies. 所以增加对物理身体的直接控制并不是一个巨大的改变。

——>> Intelligence is where it’s at. The important component is definitely higher ability 智能才是关键所在。重要的组成部分绝对是更高的能力,

——>> to optimize, to solve problems, to find patterns people cannot see. 去优化、解决问题、发现人们看不到的模式。

Host:And then by 2045, 然后到2045年,

——>> I guess the world looks even, even more um… 我猜世界看起来更加,更加呃……

——>> which is 20 years from now. 那是20年后。

——>> So if it’s still around. –If it’s still around. 所以如果世界还存在的话。——如果世界还存在的话。

Dr. Roman Yampolskiy:Ray Kurzweil predicts that that’s the year for a singularity, 雷·库兹韦尔预测2045年是奇点年,

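Ray Kurzweil (born 1948) is an American inventor, futurist, and author, known for his work in artificial intelligence, speech recognition, and transhumanism. One of his best-known ideas is his prediction of the technological singularity, the point at which artificial intelligence surpasses human intelligence.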

——>> that’s the year where progress becomes so fast 那一年,进步将变得非常之快,

——>> so this AI doing science and engineering work makes improvements so quickly, 这个从事科学和工程工作的AI使得改进发生得如此迅速,

——>> we cannot keep up anymore. 我们无法再跟上。

——>> That’s the definition of singularity. 这就是奇点的定义。

——>> Point beyond which we cannot see, understand, predict. 那个我们无法看见、理解和预测的时刻。

Host:See, understand and predict the intelligence itself, or? 无法看见、理解和预测智能本身,还是?

Dr. Roman Yampolskiy:What is happening in the world, 世界上正在发生的事情,

——>> the technology being developed, so right now, 正在被开发的技术。就像现在,

——>> if I have an iPhone, I can look forward to a new one coming out next year, 如果我有一部iPhone,我可以期待明年出新款,

——>> and I’ll understand it has slightly better camera 我会知道它有稍微好一点的摄像头。

——>> imagine now this process of researching and developing 想象一下,现在研究和开发这款手机的过程

——>> this phone is automated, it happens every six months, 被自动化了,它每六个月、

——>> every three months, every month, week, day, hour, minute, second, 每三个月、每个月、每周、每天、每小时、每分钟、每秒钟都在发生。

——>> you cannot keep up with 30 iterations [ˌɪtə’reɪʃn] of iPhone in one day, 你无法在一天内跟上iPhone的30次迭代,

——>> you don’t understand what capabilities it has, what proper controls are, 你不理解它有什么能力,适当的控制措施是什么,

——>> it just escapes you. Right now, it’s hard for any researcher in AI 它完全超出了你的理解范围。现在,任何AI研究人员

——>> to keep up with the state-of-the-art, 都很难跟上最先进的技术。

——>> while I was doing this interview with you, 就在我和你做这次采访的时候,

——>> a new model came out and I’ll no longer know what 一个新模型发布了,我将不再知道

——>> the state-of-the-art is, every day 什么是最新技术。每一天,

——>> as a percentage of total knowledge, I get dumber. 作为总知识量的百分比,我都变得更笨。

——>> I may still know more, 我可能仍然知道得更多,

——>> because I keep reading but as a percentage of overall knowledge 因为我一直在阅读,但作为整体知识的百分比,

——>> we’re all getting dumber. 我们都在变得更笨。

——>> And then you take it to extreme values, you have zero knowledge, 然后你把它推到极端值,你对周围世界的

——>> zero understanding of the world around you. 知识和理解为零。

Host:Some of the arguments against this eventuality [ɪˌventʃu’æləti] 反对这种可能性的论据之一是,

——>> are that when you look at other technologies, 当你看看其他技术,

——>> like the industrial revolution, 比如工业革命,

——>> people just found new ways to work 人们只是找到了新的工作方式,

——>> and new careers that we could never have imagined at the time were created, 创造了我们当时无法想象的新职业,

——>> how do you respond to that in a world of superintelligence? 在超级智能的世界里,你如何回应这一点?

Dr. Roman Yampolskiy:It’s a paradigm shift, we always had tools, new tools, 这是一次范式转变。我们以前总是有工具,新工具,

——>> which allowed some job to be done more efficiently, 可以让某些工作更高效地完成,

——>> so instead of having 10 workers, 所以你不需要10个工人,

——>> you could have two workers, 只需要2个,

——>> and eight workers had to find a new job. 另外8个工人必须去找新工作。

——>> And there was another job, now you can supervise these workers, 而且总有新工作出现,现在你可以去管理这些工人,

——>> or do something cool, if you’re creating a meta invention, 或者做些更酷的事。但如果你在创造一个元发明,

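Meta- (prefix) has three common senses:

About itself / self-referential (e.g. metafiction = fiction about fiction).

Beyond / above (e.g. metaphysics = beyond physics).

Higher-order / overarching (e.g. metacognition = thinking about thinking).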

——>> you’re inventing intelligence, you’re inventing a worker, an agent, 你在发明智能本身,你在发明一个工人、一个智能体,

——>> then you can apply that agent to the new job, 那么你就可以将这个智能体应用到任何新工作上,

——>> there is not a job which cannot be automated, that never happened before, 没有任何一种工作是无法被自动化的,这是前所未有的情况,

——>> all the inventions we previously had were kind of a tool for doing something, 我们之前所有的发明都只是完成某事的工具,

——>> so we invented fire, huge game changer, 我们发明了火,这是巨大的改变,

——>> but that’s it, it stops with fire, we invent a wheel, same idea, 但也就到此为止了。我们发明了轮子,也是同样的道理,

——>> huge implications. But wheel itself is not an inventor. 影响巨大。但轮子本身不是发明家。

Implications refer to the possible effects, consequences, or meanings that are suggested by something but not directly stated. (某事可能产生的影响、后果,或是未直接说明却隐含的意义。)

✅ Example Sentences

Daily Conversation:

1. If you skip the meeting, the implication is that you don’t really care about the project.

如果你缺席会议,这就意味着你并不真正关心这个项目。

2. When she smiled at him, he immediately thought there were romantic implications.

她对他微笑时,他立刻联想到可能暗含浪漫的意味。

Business Related:

3. The new policy has serious financial implications for small businesses.

新政策对小型企业有严重的财政影响。

4. Before signing the contract, we need to consider all the legal implications.

在签署合同之前,我们需要考虑所有法律上的后果。

——>> Here we’re inventing a replacement for human mind, 而我们现在正在发明的是人类思维的替代品,

——>> a new inventor capable of doing new inventions, 一个能够进行新发明的新发明家,

——>> it’s the last invention we ever have to make, at that point, 这是我们不得不进行的最后一项发明。在那一刻,

——>> it takes over the process of doing science research 它将接管一切,甚至包括科学研究、

——>> even ethics research, morals, all that is automated at that point. 伦理研究、道德研究等过程,在那个时候都将被自动化。

Host:Do you sleep well at night? 你晚上睡得好吗?

Dr. Roman Yampolskiy:Really well. 睡得非常好。

Host:Even though you, you spent the last 15, 20 years of your life 尽管你过去15、20年的人生

——>> working on AI safety and it’s suddenly among us in a way 都在研究AI安全,而它现在以一种

——>> that I don’t think anyone could have predicted five years ago. 我认为五年前没人能预料的方式出现在我们身边。

——>> When I say among us, I really mean that the amount of funding and talent 我说“在我们身边”,其实是指,现在投入到更快实现超级智能上的

——>> that is now focused on reaching superintelligence 资金和人才规模,

——>> faster has made it feel more inevitable and more soon than 让它感觉比我们任何人想象的

——>> any of us could have possibly imagined. 都更不可避免、更迫在眉睫。

Dr. Roman Yampolskiy:We as humans have this built-in bias about 我们人类有一种固有的偏见, (inherent bias → “固有的偏见” intrinsic bias → “内在的偏见” innate bias → “天生的偏见”)

——>> not thinking about really bad outcomes and things we cannot prevent, 就是不去思考那些我们无法阻止的、真正糟糕的结局,

——>> so all of us are dying, 我们所有人都在走向死亡,

——>> your kids are dying, 你的孩子正在走向死亡,

——>> your parents are dying, everyone’s dying, 你的父母正在走向死亡,每个人都在走向死亡,

——>> but you still sleep well, 但你依然睡得很好,

——>> you still go on with your day, 依然过好每一天,

——>> even 95 year olds are still doing games and playing golf and whatnot, 即使是95岁的老人也还在玩游戏、打高尔夫等等,

Whatnot is an informal word used to mean “other things of a similar kind” or “etcetera.” It often appears at the end of a list when the speaker doesn’t want to list everything in detail. (一种非正式表达,用来指“诸如此类的东西”或“等等”。常出现在列举的末尾,表示还有类似的东西,但说话人不想一一列举。 So on and so forth, the list goes on…)

Example Sentences

Daily Conversation

1. We went to the park, had some snacks, played games, and whatnot.

我们去了公园,吃了些零食,玩了游戏之类的。

2. She’s always busy with shopping, cleaning, cooking, and whatnot.

她总是忙于购物、打扫、做饭之类的事情。

Business Context

3. The new software will help us manage documents, track expenses, schedule meetings, and whatnot.

这款新软件能帮助我们管理文件、追踪开销、安排会议之类的。

4. During the merger, there were a lot of legal papers, compliance checks, financial audits, and whatnot.

在合并过程中,有大量的法律文件、合规检查、财务审计之类的。

——>> because we have this ability to not think about the worst outcomes, 因为我们有这种不去思考最坏结果的能力,

——>> especially if we cannot actually modify the outcome, 尤其是当我们实际上无法改变结果时,

——>> so that’s the same infrastructure being used for this, yeah! 所以应对AI风险时,我们利用的也是同样的底层逻辑。是的!(原意:基础设施、底层架构)

——>> There is a humanity-level death-like event, 可能会发生人类级别的灭绝性事件,

——>> we happen to be close to it probably, 我们可能正好离它很近,

——>> but unless I can do something about it, 但除非我能做些什么,

——>> I can just keep enjoying my life, in fact maybe knowing 否则我大可以继续享受我的生活。事实上,知道自己的时间有限,

——>> that you have limited amount of time left 或许更能让你有理由

——>> gives you more reason to have a better life, you cannot waste any. 去更好地生活,你不能浪费一分一秒。

——>> And that’s the survival trait of evolution I guess, 我猜这也是进化留下的生存特质,

——>> because those of my ancestors that spent all their time worrying 因为我那些整天忧心忡忡的祖先,

——>> wouldn’t have spent enough time having babies 就没有足够的时间去生儿育女

——>> and hunting to survive… 和狩猎来生存……

——>> suicidal ideation [ˌaɪdi’eɪʃn]! 这算是某种”自杀意念”!

——>> People who really start thinking about how horrible the world is 那些真正开始思考世界有多可怕的人,

——>> usually escape pretty soon. 通常很快就会选择逃避(生命)。

Host:One of the… you co-authored this paper, 其中……你合著了一篇论文,

——>> analyzing the key arguments people make against the importance of AI safety, 分析了人们反对AI安全重要性的主要论点,

——>> and one of the arguments in there is 其中一个论点是,

——>> that there’s other things that are of bigger importance right now, 眼下有其他更重要的事情,

——>> it might be world wars, it could be nuclear containment, 可能是世界大战,可能是防止核扩散,

——>> it could be other things, 可能是其他事情,

——>> there’s other things that the governments and 政府和像我这样的媒体人

——>> broadcasters like me should be talking about that are more important, 应该讨论更重要的议题,

——>> what’s your rebuttal to that argument? 你对这个论点有何反驳?

Dr. Roman Yampolskiy:So, super intelligence is the meta solution, 所以,超级智能是元解决方案,

——>> if we get super intelligence right, 如果我们能正确掌握超级智能,

——>> it will help us with climate change, 它将帮助我们应对气候变化,

——>> it will help us with wars, it can solve all the other existential risks. 它将帮助我们解决战争,它可以解决所有其他存在性风险。

——>> If we don’t get it right, 如果我们没能正确掌握它,

——>> it dominates, if climate change will take a hundred years to boil us alive 那么它将成为主导。如果气候变化需要一百年才能把我们活活煮死,

——>> and super intelligence kills everyone in five. 而超级智能在五年内就能杀死所有人。

——>> I don’t have to worry about climate change, so either way, 那我就不必担心气候变化了。所以,要么

——>> either it solves it for me, or it’s not an issue. 它为我解决了问题,要么问题本身就不再是问题了。

Host:so you think it’s the most important thing to be working on? 所以你认为这是当前最需要致力解决的问题?

Dr. Roman Yampolskiy:Without question there is nothing more important than getting this right. 毫无疑问,没有什么比正确解决这个问题更重要了。

——>> And I know everyone says it, you take any class, 我知道每个人都这么说,你上任何课,

——>> but you take English professor’s class, 比如你上英语教授的课,

——>> and he tells you this is the most important class you’ll ever take, 他也会告诉你这是你上过的最重要的课,

——>> but you can see the meta level differences with this one. 但你可以看到这个问题在元层面上的不同。

Host:Another argument in that paper is that we will always be in control, 那篇论文里的另一个论点是,我们总能控制,

——>> and that the danger is not AI, 危险不在于AI,

——>> this particular argument asserts that AI is just a tool, 这个论点声称AI只是一种工具,

——>> humans are the real actors that present danger, 人类才是真正的危险源,

——>> and we can always maintain control by simply turning it off. 我们总能通过直接关掉它来保持控制。

——>> Can’t we just pull the plug out? 我们就不能直接把插头拔掉吗?

——>> I see that every time we have a conversation on the show about AI, 每次我们在节目里讨论AI,

——>> someone says can’t we just unplug it. 总有人说”就不能拔掉电源吗”。

Dr. Roman Yampolskiy:Yeah, I get those comments on every podcast I make 是的,我做的每个播客下面都有这种评论,

——>> and I always want to like, get in touch with a guy 我总想联系那个人,

——>> and say this is Brilliant, 说”这太棒了!

——>> I never thought of it, 我从来没想到过!

——>> we’re gonna write a paper together and get a Nobel Prize for it, 我们一起写篇论文得诺贝尔奖吧!

——>> this is like let’s do it! 就这么干!”

——>> Because it’s so silly like, can you turn off a virus, 因为这太可笑了。你能关掉一个病毒吗?

——>> you have a computer virus, you don’t like it, turn it off! How about Bitcoin? 你有个电脑病毒,你不喜欢它,关掉它!比特币呢?

——>> Turn off Bitcoin network! 关掉比特币网络!

——>> Go ahead, I’ll wait! This is silly, 你试试看,我等着!这很可笑,

——>> those are distributed systems, 这些都是分布式系统,

——>> you cannot turn them off and on top of it, 你无法关闭它们。更重要的是,

——>> they’re smarter than you, they made multiple backups, 它们比你更聪明,它们做了多重备份,

——>> they predicted what you’re going to do, 它们预测了你要做什么,

——>> they will turn you off before you can turn them off. 它们会在你关掉它们之前先关掉你。

——>> The idea that we will be in control applies only to pre super intelligence levels, “我们能控制”的想法只适用于超级智能出现之前的水平,

——>> basically what we have today. 基本上就是我们今天拥有的东西。

——>> Today humans with AI tools are dangerous, they can be hackers, 今天,拥有AI工具的人类是危险的,他们可以是黑客,

——>> malevolent [mə’levələnt] actors, absolutely. 可以是恶意行为者,绝对如此。

——>> But the moment super intelligence becomes smarter, dominates, 但一旦超级智能变得比我们更聪明、占据主导地位,

——>> they no longer have an important part of that equation, 人类在这个等式中就不再是重要部分了,

——>> it is the higher intelligence I’m concerned about, 我担心的是那个更高的智能,

——>> not the human who may add additional malevolent payload, 而不是可能附加恶意指令、

——>> but at the end still doesn’t control it. 但最终仍无法控制它的人类。

Host:It is tempting 接下来一个诱人的论点是,

——>> to follow the next argument that I saw in that paper, 我在那篇论文里看到的,

——>> which basically says, listen, this is inevitable, 它基本上是说,听着,这既然是不可避免的,

——>> so there’s no point fighting against it, 反抗也就没有意义了,

——>> because there’s really no hope here. 因为根本没有希望。

——>> So we should probably give up even trying, 所以我们或许应该放弃尝试,

——>> and be faithful that it will work itself out. 并相信船到桥头自然直。

——>> Because everything you’ve said sounds really inevitable, 因为你所说的一切听起来真的不可避免,

——>> and if with… with China working on it, 而且……中国在研发,

——>> I’m sure Putin’s got some secret division, 我相信普京有秘密部门,

——>> I’m sure Iran are doing some bits and pieces, 伊朗也在做些零碎工作, (Odds and ends)

——>> every European country’s trying to get ahead of AI, 每个欧洲国家都想在AI上领先,

——>> the United States is leading the way. 美国正领跑。

——>> So it’s inevitable, 所以这是不可避免的,

——>> so we probably should just have faith and pray? 我们或许应该只是抱有信念并祈祷?

Dr. Roman Yampolskiy:Praying is always good, 祈祷总是好的,

——>> but incentives matter, if you are looking at what drives these people, 但激励很重要。如果你看看驱动这些人的是什么,

——>> so yes, money is important, 是的,金钱很重要,

——>> so there is a lot of money in that space 所以这个领域有很多钱,

——>> and so everyone’s trying to be there and develop this technology, 所以每个人都想参与并开发这项技术,

——>> but if they truly understand the argument, 但如果他们真正理解这个论点,

——>> they understand that you will be dead, 明白自己会死,

——>> no amount of money will be useful to you, then incentives switch, 再多的钱对他们也没用,那么激励就会改变,

——>> they would want to not be dead, a lot of them are young people, 他们会不想死,他们中很多是年轻人,

——>> rich people, they have their whole lives ahead of them. 富人,他们还有整个人生在前面。

——>> I think they would be better off not building advanced superintelligence, 我认为他们不建造先进的超级智能会过得更好,

——>> concentrating on narrow AI tools for solving specific problems. 专注于用狭义AI工具解决特定问题。

——>> Okay, my company cures breast cancer, 好吧,我的公司只专注于治愈乳腺癌,

——>> that’s all! We make billions of dollars, 就这样!我们赚几十亿美元,

——>> everyone’s happy, everyone benefits, it’s a win. We are still in control today, 每个人都开心,每个人都受益,这是双赢。我们今天仍然掌握着控制权,

——>> it’s not over until it’s over, we can decide not to build general superintelligences. 在结束之前都不算结束,我们可以决定不建造通用的超级智能。

Host:I mean the United States might be able to conjure up enough enthusiasm for that, 但美国或许能凝聚起足够的热情这么做,

Conjure up:to bring to mind vividly, to imagine, or to make something appear as if by magic. It can refer to both literal magical summoning and figurative recall of images, emotions, or ideas. (令人脑海中浮现、想象出,或像魔法般召唤出现。它既可以用来形容神奇的“召唤”,也常用来指唤起某种记忆、形象或情感。 近义词:summon up, evoke)

1. The smell of fresh bread conjures up memories of my grandmother’s kitchen.

新鲜面包的香味让我立刻想起了祖母的厨房。

2. That horror movie conjured up nightmares for me last night.

那部恐怖电影昨晚让我做了噩梦。

3. The marketing team managed to conjure up a brilliant slogan that captured everyone’s attention.

市场团队设法想出了一个极具吸引力的口号,吸引了所有人的注意。

4. Investors want to know if the company can conjure up innovative ideas to stay ahead of competitors.

投资者想知道公司是否能想出创新的点子,以保持领先竞争对手。

🔹 1. Conjure up

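Connotation: carries a hint of “magic” or “sudden appearance,” emphasizing vividness or wonder. Often used with memories, images, imagination, and creative ideas.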

The smell of rain conjured up childhood summers in the countryside.

雨的味道让我瞬间浮现出童年在乡下的夏天。

🔹 2. Call up

Connotation: everyday and colloquial, meaning “to bring to mind, to recall.” More ordinary than conjure up, with less of the “magical/vivid” flavor.

Her voice called up memories of our college days.

她的声音让我想起了大学时代。

🔹 3. Evoke

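Connotation: formal, written register, common in literary, psychological, and art criticism. It emphasizes “stirring up a feeling or reaction” and sounds somewhat academic.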

The painting evokes a sense of loneliness and fragility.

这幅画唤起了一种孤独与脆弱的感觉。

🔹 4. Summon up

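Connotation: focuses on “mustering or calling forth a strength or courage,” mostly used for spirit, emotion, and willpower. It emphasizes an active effort to draw on something.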

She had to summon up all her courage to give that speech.

她必须鼓起全部勇气来发表那次演讲。

——>> but if the United States doesn’t build general superintelligences, 但如果美国不建造通用超级智能,

——>> then China are going to have the big advantage, right? 那么中国就会拥有巨大优势,对吧?

Dr. Roman Yampolskiy:So right now, at those levels, 所以目前,在这个水平上,

——>> whoever has more advanced AI, has more advanced military, 谁拥有更先进的AI,谁就拥有更先进的军事力量,

——>> no question, we see it with existing conflicts, 这是毫无疑问的,我们从现有的冲突中就能看到,

——>> but the moment you switch to superintelligence, 但一旦你转向超级智能,

——>> uncontrolled superintelligence, 不受控制的超级智能,

——>> it doesn’t matter who builds it, us or them, 谁建造了它就不重要了,是我们还是他们,

——>> and if they understand this argument, 如果他们理解这个论点,

——>> they also would not build it, 他们也不会去建造它,

——>> it’s a mutually assured destruction on both ends. 这就像是双方相互确保毁灭。

Host:Is this technology different than, say, nuclear weapons, 这项技术是否不同于,比如说,核武器?

——>> which require a huge amount of investment 制造核武器需要巨额投资,

——>> and you have to enrich the uranium [ju’reɪniəm] and 你需要浓缩铀,

——>> you need billions of dollars potentially to even build a nuclear weapon? 甚至可能需要数十亿美元才能造出一件核武器?

——>> But it feels like this technology is much cheaper to get to superintelligence, 但感觉上,实现超级智能这项技术要便宜得多,

——>> potentially or at least it will become cheaper? 或者至少会变得越来越便宜?

——>> I wonder if it’s possible that some, some guy, some startup is going to 我在想,有没有可能某个人、某个初创公司,

——>> be able to build superintelligence in, you know, a couple of years 能在几年内造出超级智能,

——>> without the need of billions of dollars of compute or electricity power. 而不需要数十亿美元的计算能力或电力。

Dr. Roman Yampolskiy:That’s a great point, so every year it becomes cheaper 说得好。每年训练足够大的模型的成本

——>> and cheaper to train sufficiently large model, 都在变得越来越低,

——>> if today it would take a trillion dollars to build super intelligence, 如果今天建造超级智能需要一万亿美元,

——>> next year it could be a hundred billion and so on, at some point, 明年可能就只要一千亿,依此类推。到某个时候,

——>> a guy on a laptop could do it. 一个人用笔记本电脑就能做到。

——>> But you don’t want to wait four years for making it affordable, 但(大公司)不想等四年让它变得便宜,

——>> so that’s why so much money is pouring in, 所以才有这么多资金涌入,

——>> somebody wants to get there this year and lock in all the winnings, 有人想今年就达到目标,锁定所有收益,

——>> lightcone level award, so in that regard, 赢得”光锥级”的奖项。在这方面,

The phrase “lightcone level award” is not a standard technical term but rather a metaphorical expression. It borrows imagery from physics:

Lightcone: In relativity, a lightcone represents the boundary of all possible events that can be influenced by, or can influence, a given point in spacetime. In AI discussions, the term is used figuratively to suggest a critical future milestone — a point beyond which history may unfold differently depending on who reaches it first.

Level award: Here, it doesn’t mean a literal “prize” but instead refers to the huge payoff or advantage one receives for being the first to achieve that milestone.

So, when someone says “lightcone level award”, they are pointing to the idea that:

👉 Whoever reaches that game-changing AI milestone first will “lock in” extraordinary benefits — money, influence, control — in a way that strongly resembles a winner-takes-all outcome.

这个短语并不是一个标准的技术术语,而是一个隐喻性的表达。它借用了物理学中的意象:

光锥(lightcone):在相对论中,光锥表示某一时空点能影响到或被影响到的所有可能事件的边界。在 AI 的讨论里,这个词被比喻为一个关键的未来里程碑——一旦被率先实现,历史的走向可能会彻底不同。

Level award:这里并不指字面意义上的“奖项”,而是指率先实现该里程碑所带来的巨大回报或优势。

因此,当人们说 “lightcone level award” 时,他们的意思是:

👉 谁要是第一个实现了那个改变游戏规则的 AI 里程碑,就能“锁定”巨大的收益——金钱、影响力、控制权——其性质很像一种 赢家通吃(winner-takes-all) 的结果。

——>> they’re both very expensive projects, 两者都是非常昂贵的项目,

——>> like Manhattan level projects, 像曼哈顿计划那种级别的项目,

——>> which is the nuclear bomb project. –Right. 也就是造原子弹的计划。——对。

——>> The difference between the two technologies 这两种技术的区别在于,

——>> is that nuclear weapons are still tools, some dictator, 核武器仍然是工具,需要某个独裁者,

——>> some country, someone has to decide to use them, deploy them, 某个国家、某个人决定使用它们、部署它们。

——>> whereas super intelligence is not a tool, 而超级智能不是工具,

——>> it’s an agent, it makes its own decisions, 它是一个智能体,它自己做决定,

——>> and no one is controlling it. I cannot take out this dictator, 没有人能控制它。我无法干掉这个独裁者,

——>> and now superintelligence is safe. 然后超级智能就安全了。

——>> So that’s a fundamental difference to me. 所以这对我来说是一个根本性的区别。

Host:But if you’re saying that 但如果你说

——>> it is going to get incrementally [ˌɪŋkrə’mentəli] cheaper, 它会逐渐变得更便宜,

——>> like I think it’s Moore’s law, isn’t it? That technology gets cheaper, 像是摩尔定律对吧?技术变得越来越便宜,

——>> then there is a future where some guy on his laptop 那么未来就会出现这样的情况:某个人用他的笔记本电脑

——>> is going to be able to create superintelligence 就能创造出超级智能,

——>> without oversight or regulation or employees, etc. 而没有监督、监管或员工等等。

Dr. Roman Yampolskiy:Yeah, that’s why a lot of people suggesting 是的,所以很多人建议

——>> we need to build something like a 我们需要建造一个

——>> surveillance planet where you are monitoring who’s doing what, “监控星球”,监视每个人在做什么,

——>> and you’re trying to prevent people from doing it, 试图阻止人们这么做。

——>> do I think it’s feasible? No, at some point, 我认为这可行吗?不。到某个时候,

——>> it becomes so affordable and so trivial that it just will happen, 它会变得如此廉价和简单,以至于它终究会发生,

——>> but at this point, we’re trying to get more time, 但在目前,我们试图争取更多时间,

——>> we don’t want it to happen in five years, 我们不希望它在五年内发生,

——>> we want it to happen in 50 years. 我们希望它在50年后才发生。

Host:I mean that’s not very hopeful, so… 这听起来并不怎么充满希望,所以……

Dr. Roman Yampolskiy:Depends on how old you are. 这取决于你多大年纪。

Host:Depends on how old you are? 取决于你多大年纪?

——>> I mean if you’re saying that you believe, in the future, 我的意思是,如果你认为在未来,

——>> people will be able to make superintelligence 人们能够无需今天的资源

——>> without the resources that are required today, 就制造出超级智能,

——>> then it is just a matter of time. 那么这就只是时间问题。

Dr. Roman Yampolskiy:Yeah, but so will be true for many other technologies, 是的,但许多其他技术也是如此,

——>> we’re getting much better at synthetic [sɪn’θetɪk] biology, where today, 我们在合成生物学方面正变得越来越厉害,今天,

——>> someone with a bachelor’s degree in biology can probably create a new virus, 一个拥有生物学学士学位的人或许就能制造出一种新病毒,

——>> this will also become cheaper, other technologies are like that. 这也会变得更便宜,其他技术也是这样。

——>> So we are approaching a point where it’s very difficult to make sure 所以我们正在接近一个节点,确保

——>> no technological breakthrough is the last one. 某项技术突破不是最后一项,将变得非常困难。

——>> So essentially, in many directions, 所以本质上,在许多方向上,

——>> we have this pattern of making it easier in terms of resources, 我们都有这种模式,使得在资源和智能层面

——>> in terms of intelligence to destroy the world. 毁灭世界变得更容易。

——>> If you look at… I don’t know, 500 years ago, 看看……我不知道,500年前,

——>> the worst dictator with all the resources could kill a couple million people, 一个拥有所有资源的最坏的独裁者可以杀死几百万人,

——>> he couldn’t destroy the world, now we know nuclear weapons, 但他无法摧毁世界。现在我们知道核武器

——>> we can blow up the whole planet multiple times over. 可以多次炸毁整个星球。

——>> Synthetic biology we saw with covid, 合成生物学,我们通过新冠看到了,

——>> you can very easily create a combination virus 你可以很容易地制造出一种组合病毒,

——>> which impacts billions of people, 影响数十亿人,

——>> and all those things becoming easier to do. 而所有这些事情都变得越来越容易做到。

Host:In the near term, you talk about extinction being a real risk, 在短期内,你谈到灭绝是一个真实的风险,

——>> human extinction being a real risk, of all the pathways to human extinction 人类灭绝是真实的风险,在你认为所有最可能的人类灭绝路径中,

——>> that you think are most likely, what is the leading pathway? 最主要的路径是什么?

——>> Because I know you talked about there being some issue, 因为我知道你谈到过一些问题,

——>> pre-deployment of these AI tools like, you know, 在部署这些AI工具之前,比如,

——>> someone makes a mistake when they’re designing a model or other issues, 有人设计模型时犯错或其他问题,

——>> post-deployment, when I say post-deployment, 或在部署之后,当我说部署之后,

——>> I mean once a ChatGPT or something, an agent’s released into the world, 我指的是一旦ChatGPT或某个智能体发布到世界后,

——>> and someone hacking into it and changing it, 有人黑入并改变它,

——>> and reprogramming it to be malicious, 重新编程使其变得恶意,

——>> of all these potential paths to human extinction, 在所有这些可能导致人类灭绝的路径中,

——>> which one do you think is the highest probability? 你认为哪个概率最高?

Dr. Roman Yampolskiy:So I can only talk about the ones I can predict myself, 我只能谈论我自己能预测的那些。

——>> so I can predict even before we get to superintelligence, 所以,我预测甚至在我们达到超级智能之前,

——>> someone will create a very advanced biological tool, create a novel virus, 就会有人制造出非常先进的生物工具,制造出一种新型病毒,

——>> and that virus gets everyone or almost everyone, I can envision it, 而这种病毒会感染所有人或几乎所有人,我可以预见到这一点,

——>> I can understand the pathway, I can say that. 我能理解这个路径,我可以这么说。

Host:So just to zoom in on that then, 那么具体来说,

——>> that would be using an AI to make a virus and then releasing it, 就是利用AI制造病毒然后释放它,

——>> and would that be intentional or? 是故意的吗?

Dr. Roman Yampolskiy:There is a lot of psychopaths [‘saɪkəpæθ], a lot of terrorists, a lot of doomsday [‘duːmzdeɪ] cults, 世界上有很多精神变态者、恐怖分子、末日邪教,

——>> we’ve seen historically again they tried to kill as many people as they can, 历史上我们看到他们一再试图杀死尽可能多的人,

——>> they usually fail, they kill hundreds or thousands, 他们通常失败了,只杀死了数百或数千人,

——>> but if they get technology to kill millions of civilians [sɪ’vɪljən], they would do that gladly, 但如果他们掌握了能杀死数百万平民的技术,他们会很乐意这么做。

——>> the point I’m trying to emphasize 我想强调的重点是,

——>> is that it doesn’t matter what I can come up with, 我能想出什么并不重要,

——>> I’m not a malevolent actor you’re trying to defeat here, 我并不是你想要击败的恶意行为者,

——>> it’s the super intelligence which can come up with completely novel ways of doing it. 超级智能才能想出全新的、我们想不到的方法。

——>> again, you brought up example of your dog, 再次用你的狗举例,

——>> your dog cannot understand all the ways you can take it out, 你的狗无法理解所有你能干掉它的方法,

——>> it can maybe think you’ll bite it to death or something, 它可能以为你会咬死它之类的,

——>> but that’s all, whereas you have infinite supply of resources, 但也就这些了。而你拥有无限的资源,

——>> so if I asked your dog exactly how you’re going to take it out, 所以如果我问你的狗,你具体会怎么干掉它,

——>> it would not give you a meaningful answer, it can talk about biting, 它给不出有意义的答案,它只能说到咬,

——>> and this is what we know, we know viruses, 而这就是我们所知道的,我们知道病毒,

——>> we experienced viruses, we can talk about them, 我们经历过病毒,我们可以谈论它们,

——>> but what an AI system capable of doing novel physics research can come up with 但一个能够进行新颖物理学研究的AI系统能想出什么,

——>> is beyond me. 是超出我的认知范围的。

Host:One of the things that I think most people don’t understand is how little 我认为大多数人不太理解的一点是,我们对这些AI

——>> we understand about how these AIs are actually working. 实际如何工作的了解是多么的少。

——>> Because one would assume, you know, with computers, 因为人们会假设,对于计算机,

——>> we kind of understand how a computer works, we know that it’s doing this, 我们大概知道它是怎么工作的,我们知道它先做这个,

——>> and then this, and it’s running on code, 再做那个,它运行在代码上。

——>> but from reading your work, 但读了你的作品后,

——>> you describe it as being a black box, in the context of something like ChatGPT, 你将其描述为一个”黑箱”,在像ChatGPT这样的AI语境下,

A black box is something whose internal workings are hidden, unknown, or too complex to understand, but its inputs and outputs are visible. You know what goes in and what comes out, but you don’t necessarily know how it happens inside. (指某个系统或过程,其内部运作不可见、未知或复杂难懂,人们只能看到它的输入和输出,却无法完全了解它内部是如何实现的。Technology/AI → an algorithm or system where the decision-making process is not transparent).

——>> or an AI we know. 或我们知道的AI。

——>> You’re telling me that the people that have built that tool 你是说制造这些工具的人

——>> don’t actually know what’s going on inside there. 实际上也不知道里面发生了什么。

Dr. Roman Yampolskiy:That’s exactly right, so even people making those systems, 完全正确。即使是制造这些系统的人,

——>> have to run experiments on their product to learn what it’s capable of. 也不得不对他们的产品进行实验,以了解它有什么能力。

——>> So they train it by giving it all the data, let’s say all of internet text, 他们通过给它所有数据(比如所有互联网文本)来训练它,

——>> they run it on a lot of computers to learn patterns in that text, 他们在大量计算机上运行它以学习文本中的模式,

——>> and then we start experimenting with that model, oh, do you speak French? 然后我们开始试验这个模型:哦,你会说法语吗?

——>> Oh, can you do mathematics, oh, are you lying to me now? 哦,你会做数学吗?哦,你现在是在对我撒谎吗?

——>> And so maybe it takes a year to train it 所以,训练它可能需要一年,

——>> and then six months to get some fundamentals about what it’s capable of, 然后花六个月时间来了解它的一些基本能力,

——>> some safety overhead, 做一些安全评估,

——>> but we still discover new capabilities in old models, 但我们仍然会在旧模型中发现新的能力,

——>> if you ask the question in a different way, it becomes smarter. 如果你用不同的方式提问,它会变得更聪明。

——>> So it’s no longer engineering, 所以这不再是工程学,

——>> how it was the first 50 years where someone was a knowledge engineer 不像头50年那样,由知识工程师

——>> programming an expert system AI to do specific things, 编程专家系统AI来做特定的事情。

——>> it’s a science, we are creating this artifact, growing it, 这是一门科学。我们是在创造这个人工制品,培育它,

——>> it’s like an alien plant, and then we study it to see what it’s doing 就像一种外星植物,然后我们研究它,看它在做什么,

——>> and just like with plants, 就像对植物一样,

——>> we don’t have hundred percent accurate knowledge of biology, 我们对生物学没有百分之百准确的了解,

——>> we don’t have full knowledge here, 我们在这里也没有完全的知识,

——>> we kind of know some patterns, we know okay, 我们大概知道一些模式,我们知道如果增加计算能力,

——>> if we add more compute, it gets smarter most of the time, but, 它大多时候会变得更聪明,但是,

——>> nobody can tell you precisely 没人能精确地告诉你,

——>> what the outcome is going to be given a set of inputs. 给定一组输入,输出会是什么。

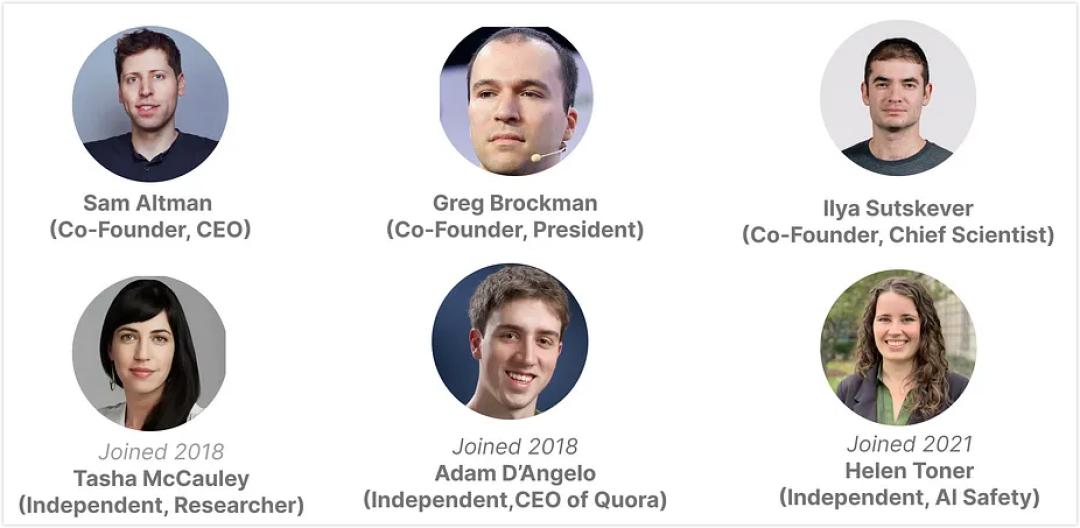

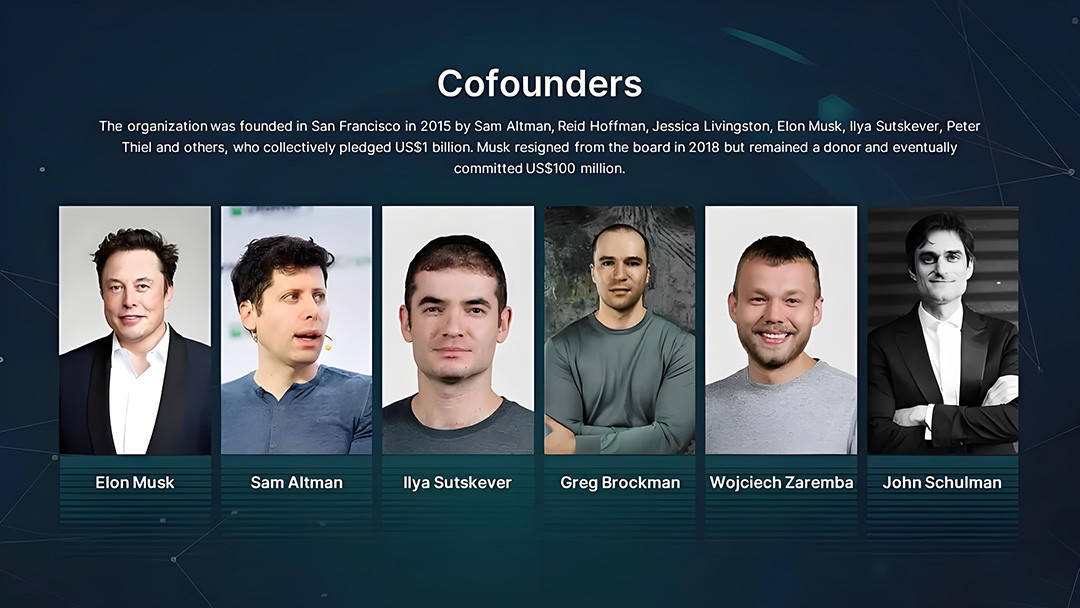

Host:What do you make of OpenAI and Sam Altman and what they’re doing? 你对OpenAI和Sam Altman以及他们在做的事情怎么看?

——>> And obviously you’re aware that one of the cofounders, was it… was it 显然你也知道,其中一位联合创始人,是……

——>> Ilya Sutskever 伊尔亚·苏茨克维吗?

Dr. Roman Yampolskiy:Ilya, yeah, Ilya left and he started a new company called… Ilya,是的。Ilya离开了,并创办了一家新公司叫……

——>> Superintelligent safety “超级智能安全”(Superintelligent safety;该公司的正式名称是 Safe Superintelligence Inc.),

——>> because AI safety wasn’t challenging enough, 因为AI安全还不够有挑战性,

——>> he decided to just jump right to the hard problem. 他决定直接攻克这个难题。

Host:As an onlooker, when you see 作为一个旁观者,当你看到

——>> that people are leaving OpenAI to start superintelligent safety companies, 人们离开OpenAI去创办超级智能安全公司时,

——>> what was your read on that situation? 你对这种情况有什么看法?(Similar expressions: what do you think of…, how would you comment on…, what do you reckon…, what’s your take on…)

Dr. Roman Yampolskiy:So a lot of people who worked with Sam said that 很多与Sam共事过的人说,

——>> maybe he’s not the most direct person 他可能不是最直接、

——>> in terms of being honest with them, 最诚实的人,

——>> and they had concerns about his views on safety, 他们对他在安全问题上的看法有所担忧,

——>> that’s part of it, 这是部分原因,

——>> so they wanted more control 所以他们想要更多控制权,

——>> they wanted more concentration on safety, 想要更专注于安全,

——>> but also it seems that anyone who leaves that company 但似乎任何离开那家公司

——>> and starts a new one, gets a 20 billion dollar valuation 并创办新公司的人,仅仅因为创业就能获得200亿美元的估值,

——>> just for having it started, you don’t have a product 你还没有产品,

——>> you don’t have customers, 没有客户,

——>> but if you want to make many billions of dollars, just do that, 但如果你想赚几十亿美元,就这么干,

——>> so it seems like a very rational thing to do for anyone who can, 所以这对任何有能力的人来说,似乎都是一件非常理性的事,

——>> so I’m not surprised that there is a lot of attrition [ə’trɪʃn], 因此我对有这么多人离职并不感到惊讶。

Attrition:gradual reduction or weakening in number, strength, or effectiveness. (消耗、自然减员):指数量、力量或效能的逐渐减少或削弱。

——>> meeting him in person, he’s super nice, very smart, 就我本人见过他而言,他非常友善,非常聪明,

——>> absolutely perfect public interface, you see him testify in the Senate [‘senət], 拥有完美的公众形象。你看他在参议院作证,

——>> he says the right thing to the senators, you see him talk to the investors, 他对参议员们说了正确的话;你看他与投资者交谈,

——>> they get the right message. 他们得到了他们想听的信息。

——>> but if you look at what people who know him personally are saying, 但如果你看看那些认识他本人的人怎么说,

——>> he’s probably not the right person to be controlling a project of that impact, 他可能不是控制如此影响力项目的合适人选。

Host:Why? 为什么?

Dr. Roman Yampolskiy:He puts safety second. 他把安全放在第二位。

Host:Second to? 次于什么?

Dr. Roman Yampolskiy:Winning this race to superintelligence, 赢得这场通往超级智能的竞赛,

——>> being the guy who created God and controlling 成为那个创造了“神明”并控制

——>> the light cone [kəʊn] of the universe. He’s worse. 宇宙光锥的人。他更糟糕。

Light cone: in physics, the region of spacetime that a given event can causally influence or be influenced by. Here it is a figure of speech for ultimate control or power. (“控制宇宙的光锥”则暗示对宇宙事件或因果关系的掌控。)

“being the guy who created God and controlling the light cone of the universe” 描绘了一个形象:此人不仅是某个堪称神明的存在(God,这里指超级智能)的创造者,更掌控着宇宙中因果影响所能到达的全部范围(光锥),其权力和影响力达到了物理规律的层面。

Host:Do you suspect that’s 你怀疑

——>> what he’s driven by is by the legacy of being an impactful person 他的驱动力是成为有影响力、

——>> that did a remarkable thing 做了非凡之事的传奇人物,

——>> versus the consequence that that might have for society? 而不是那可能对社会造成的后果?

——>> Because it’s interesting that his other startup is Worldcoin, 因为这很有趣,他的另一个初创公司是Worldcoin,

——>> which is basically a platform to create universal basic income, 基本上是一个创建全民基本收入的平台,

——>> a platform to give us income in a world 一个在人们没有工作的世界里

——>> where people don’t have jobs anymore. 为我们提供收入的平台。

——>> So on one hand, you’re creating an AI company, on the other hand 所以一方面,你在创建一家AI公司,另一方面

——>> you’re creating a company that is preparing for people not to have employment. 你在创建一家为人们失业做准备的公司。

Dr. Roman Yampolskiy:It also has other properties, 它还有其他特性,

——>> it keeps track of everyone’s biometrics [ˌbaɪəʊ’metrɪks]. 它跟踪每个人的生物特征信息。

——>> It keeps you in charge of a world’s economy, 它让你掌控了世界经济,

——>> world’s wealth, by retaining a large portion of Worldcoins, 世界财富,通过保留大量的Worldcoin,

——>> so I think it’s kind of very reasonable part 所以我认为这是整合“世界主导权”

——>> to integrate with world dominance, 非常合理的一部分,

——>> if you have a superintelligent system, 如果你有一个超级智能系统,

——>> and you control money, 并且你控制着货币,

——>> you’re doing well. 那你就做得很好。

Host:Why would someone want world dominance? 为什么有人会想要主导世界?

Dr. Roman Yampolskiy:People have different levels of ambition, 人们的野心程度不同。

——>> when you’re a very young person with billions of dollars, fame, 当你是一个拥有数十亿美元、名声显赫的年轻人时,

——>> you start looking for more ambitious projects, 你开始寻找更宏大的项目,

——>> some people want to go to Mars, 有些人想去火星,

——>> others want to control light cone of a universe. 其他人想控制宇宙的光锥。

欧文说:Okay, let’s break down this intense conversation. Dr. Yampolskiy argues that if developers truly understood the existential risk, self-preservation would kick in, especially for the young, wealthy individuals leading these projects. He suggests focusing on beneficial narrow AI instead of racing towards superintelligence.

He dismantles counter-arguments: unlike nuclear weapons, which are controlled tools, superintelligence is an autonomous agent that cannot be simply “turned off.” He expresses deep skepticism about achieving control or safety, viewing it as an impossible problem. He finds it irrational that developers proceed with such high stakes, comparing it to taking a 1% risk of death for a monetary reward.

Regarding solutions, he is pessimistic about legislation and believes creating a “surveillance planet” is infeasible. His primary hope is to convince those in power through personal self-interest—that they too will die in a catastrophe. For the public, he recommends supporting movements like “Pause AI” and, on a personal level, living meaningfully and pursuing impactful work, given the uncertain future. He also reiterates his strong belief in the simulation theory, seeing our rapid AI and VR advancements as precursors to creating indistinguishable simulated worlds.